Accelerate your AI launch: The power of off-the-shelf datasets

Building a robust artificial intelligence model is a bit like training a high-performance athlete. You can have the best coaching (algorithms) and the best equipment (hardware), but without the right nutrition (data), performance will inevitably suffer. For years, the standard approach to “nutrition” was growing your own ingredients—painstakingly collecting, labeling, and cleaning proprietary data from scratch. While this method offers precision, it is often slow, expensive, and resource-heavy.

But the landscape of AI development is shifting. We are seeing a surge in the availability and quality of off-the-shelf AI datasets—pre-collected, pre-labeled libraries of information ready for immediate deployment. For startups racing to market or enterprises looking to test a proof of concept without burning through their budget, these datasets are changing the equation.

Instead of waiting months for a custom data collection pipeline to mature, developers can now access high-quality structured training data instantly. This shift allows teams to focus on what really matters: refining their models and delivering value to users. Whether you are building a conversational AI for the finance sector or a computer vision model for healthcare, the right pre-built dataset can be the difference between a launch next week and a launch next year.

What are off-the-shelf AI datasets?

Off-the-shelf AI datasets are pre-packaged collections of training data that are ready for immediate purchase and use. Unlike custom data collection, where a vendor sources specific data based on your unique requirements, off-the-shelf options are “stock” items available in a data marketplace.

Think of it as buying a suit. Custom collection is bespoke tailoring—it fits perfectly but takes time and money. Off-the-shelf is buying off the rack—it is instant, more affordable, and with the vast variety available today, you are highly likely to find a near-perfect fit for your needs.

These datasets are typically curated by data experts who ensure the content is annotated, validated, and often compliant with privacy regulations like GDPR or HIPAA. They cover broad use cases, from general speech recognition to niche medical imaging, making them an essential resource for scaling AI systems quickly.

The strategic advantage of pre-built data

Why are so many organizations turning to data marketplaces like Macgence? The advantages go beyond simple convenience.

Speed to market

In the tech industry, speed is a currency. Developing a dataset from scratch—defining requirements, sourcing data, annotating it, and conducting quality assurance—can take months. Off-the-shelf datasets allow you to skip this entire phase. You can download the data and start training your model on the same day. This is particularly crucial for rapid prototyping, where you need to validate an idea before committing significant resources.

Cost efficiency

Custom data collection is labor-intensive. It requires recruiting participants, managing data collectors, and paying for hours of manual annotation. Pre-built datasets amortize these costs across multiple buyers. This means you get access to high-quality, expert-verified data at a fraction of the price of a custom project.

Proven quality and compliance

Reputable data providers invest heavily in quality control. When you purchase a dataset from a trusted marketplace, you are often getting data that has passed rigorous validation checks. Furthermore, ethical sourcing is a major concern in AI today. Top-tier off-the-shelf datasets are typically collected with proper consent and anonymization, mitigating legal risks associated with data privacy.

Exploring the types of available datasets

The variety of data available off-the-shelf is staggering. A quick browse through a comprehensive directory reveals datasets catering to almost every industry. Here are a few key categories you can expect to find:

Speech and Audio

This is one of the most populated categories, essential for training NLP (Natural Language Processing) and conversational AI models.

- Call Center Conversations: These datasets often feature recordings of interactions between agents and customers. For example, you might find specific sets like “Indian Agent to US Customer” conversations tailored for the finance or travel sectors. These are gold mines for training chatbots to understand accents, specific industry terminology, and sentiment.

- General Utterances: These are collections of short phrases or commands used to train voice assistants. They are available in a multitude of languages, from Dutch to Hindi, ensuring your model can serve a global audience.

- Medical Speech: Specialized audio datasets, such as patient-doctor conversations, help in developing transcription tools for healthcare professionals.

Computer Vision (Images and Video)

Visual data is critical for autonomous systems and diagnostic tools.

- Medical Imaging: High-stakes fields need high-quality data. Marketplaces offer datasets containing MRI scans of different body parts or dermatological images (like skin conditions) to train diagnostic support tools.

- Document OCR: To train AI to read paperwork, you need examples. Datasets of bank statements (from the UK, USA, etc.) are commonly used to teach models how to extract text from structured documents.

- Video Scenarios: Training autonomous vehicles or security systems requires dynamic data. You can find video datasets of construction sites for safety monitoring or dashcam footage for driver assistance systems.

Text and Chatbot Data

For text-based AI, volume and variety are key.

- Chatbot Logs: Massive logs of customer service interactions in sectors like Ecommerce or BFSI (Banking, Financial Services, and Insurance). These help models learn the flow of conversation and how to resolve queries effectively.

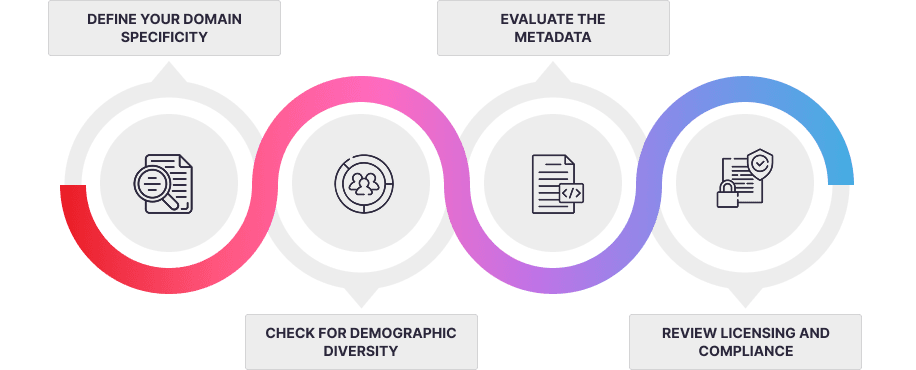

How to choose the right dataset for your project

With so many options, selecting the right dataset requires a strategic approach. It isn’t just about grabbing the largest file available; it’s about relevance.

1. Define your domain specificity

Does the data match your specific use case? If you are building a customer support bot for an American bank, a dataset of general casual conversation won’t be enough. You need financial context. Look for datasets labeled with specific verticals, such as “Finance” or “Travel,” to ensure the terminology matches your deployment environment.

2. Check for demographic diversity

AI bias often stems from homogeneous training data. If your voice assistant needs to understand English speakers globally, training it solely on American accents is a recipe for failure. Look for datasets that explicitly state the demographics, such as “Indian Agent to US Customer” or specific regional dialects. This ensures your model is robust and inclusive.

3. Evaluate the metadata

Good data comes with good documentation. Before purchasing, check what metadata is included. For audio, does it include information about the speaker’s age, gender, and recording environment? For images, are the lighting conditions and resolutions specified? detailed metadata allows for more nuanced model training.

4. Review licensing and compliance

Never overlook the legalities. Ensure the dataset comes with a clear license that permits commercial use. If you are dealing with personal data (like medical images or financial records), verify that the provider adheres to data privacy laws and that all PII (Personally Identifiable Information) has been redacted or anonymized.

Potential challenges to watch out for

While off-the-shelf AI datasets are powerful, they are not a magic wand. There are considerations to keep in mind to ensure success.

Static nature of data: Pre-built datasets represent a snapshot in time. Language evolves, slang changes, and visual environments shift. If you buy a dataset from five years ago, it might not reflect current realities. It is often wise to combine off-the-shelf data with a smaller stream of fresh, custom-collected data to keep your model current.

Generic vs. Niche: Sometimes, your problem is truly unique. If you are building a model to detect defects in a brand-new, proprietary manufacturing part, you likely won’t find that data on a marketplace. In these cases, off-the-shelf data can serve as a foundation for “transfer learning,” where you pre-train a model on generic data and fine-tune it with a small amount of custom data.

Real-world applications of pre-built data

The practical application of these datasets is driving innovation across industries.

- Healthcare Diagnostics: Startups are using off-the-shelf libraries of MRI and X-ray images to build AI assistants that help radiologists detect anomalies faster. By starting with a massive library of pre-labeled scans, they can achieve high accuracy without needing to partner with hospitals for years of data collection first.

- Fintech Customer Service: Banks are deploying voice bots capable of handling complex queries about mortgages and credit cards. They achieve this by training their models on thousands of hours of pre-recorded financial call center conversations, allowing the AI to learn the nuances of banking dialogue immediately.

- Autonomous Safety Systems: Construction companies are using video datasets of worksites to train cameras that detect safety violations, such as workers not wearing helmets. Buying existing footage of construction sites accelerates the deployment of these life-saving tools.

The future of AI is data-accessible

The democratization of AI relies heavily on the democratization of data. As we move forward, the ability to easily access high-quality, ethical, and diverse training data will become the standard for development.

Off-the-shelf AI datasets are no longer just a shortcut; they are a strategic asset. They allow businesses to prototype rapidly, reduce entry barriers, and ensure their models are trained on diverse, compliant foundations.

If you are ready to accelerate your AI development, don’t start from square one. Explore the Macgence Data Marketplace to find the specific audio, video, image, and text datasets that will power your next innovation.

You Might Like

February 18, 2026

Prebuilt vs Custom AI Training Datasets: Which One Should You Choose?

Data is the fuel that powers artificial intelligence. But just like premium fuel vs. regular unleaded makes a difference in a high-performance engine, the type of data you feed your AI model dictates how well it runs. The global market for AI training datasets is booming, with companies offering everything from generic image libraries to […]

February 17, 2026

Building an AI Dataset? Here’s the Real Timeline Breakdown

We often hear that data is the new oil, but raw data is actually more like crude oil. It’s valuable, but you can’t put it directly into the engine. It needs to be refined. In the world of artificial intelligence, that refinement process is the creation of high-quality datasets. AI models are only as good […]

February 16, 2026

The Hidden Cost of Poorly Labeled Data in Production AI Systems

When an AI system fails in production, the immediate instinct is to blame the model architecture. Teams scramble to tweak hyperparameters, add layers, or switch algorithms entirely. But more often than not, the culprit isn’t the code—it’s the data used to teach it. While companies pour resources into hiring top-tier data scientists and acquiring expensive […]