- What Does “High-Quality AI Dataset” Really Mean?

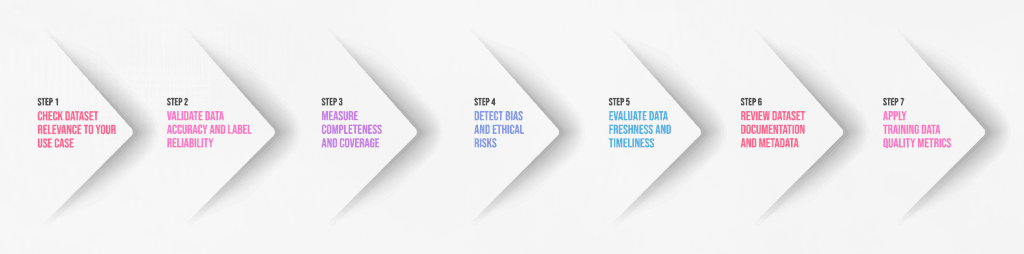

- Step 1: Check Dataset Relevance to Your Use Case

- Step 2: Validate Data Accuracy and Label Reliability

- Step 3: Measure Completeness and Coverage

- Step 4: Detect Bias and Ethical Risks

- Step 5: Evaluate Data Freshness and Timeliness

- Step 6: Review Dataset Documentation and Metadata

- Step 7: Apply Training Data Quality Metrics

- Common Red Flags When Evaluating AI Datasets

- Build vs Buy: Why Dataset Marketplaces Reduce Risk

- Practical Checklist: How to Evaluate an AI Dataset Before Training

- AI Dataset Quality Is a Strategic Decision

How to Evaluate an AI Dataset Before Using It for Training

It’s a common misconception in the world of artificial intelligence: if the model isn’t performing well, we need a better algorithm. In practice, the architecture is rarely the weakest link; far more often, the bottleneck is the data.

You can have the most sophisticated neural network available, but if it learns from flawed examples, its output will be flawed. This principle, often summarized as “garbage in, garbage out,” has real-world consequences. We’ve all seen the headlines about AI hallucinations, biased hiring algorithms, or self-driving cars misinterpreting street signs. Many of these are not coding errors at all; they trace back, at least in part, to AI dataset quality.

Evaluating your data isn’t just a technical step; it’s a strategic necessity. Whether you are building a computer vision model for autonomous vehicles or a chatbot for customer service, the integrity of your training data dictates the success of your deployment. This guide will walk you through the essential steps to evaluate dataset quality before you invest time and resources into training.

What Does “High-Quality AI Dataset” Really Mean?

Before we can evaluate a dataset, we need to define what we are looking for. AI dataset quality isn’t an abstract concept; it is a measurable characteristic defined by four key pillars:

- Accuracy: Does the data truthfully represent the real world?

- Relevance: Is the data applicable to the specific problem you are solving?

- Coverage: Does the dataset account for edge cases and variety?

- Consistency: Are the labels and formats uniform across the entire dataset?

It is also crucial to distinguish between raw data and training-ready data. A folder full of thousands of unlabeled images is raw data. While valuable, it is not “high quality” in the context of supervised learning until it has been annotated, validated, and structured. To objectively determine if a dataset is ready, we rely on specific training data quality metrics, which move us away from gut feelings and toward data-driven decisions.

Step 1: Check Dataset Relevance to Your Use Case

The first step in evaluation is ensuring the data actually fits your specific needs. You might find a massive, clean dataset of conversation logs, but if your goal is to build a chatbot for legal advice and the dataset is from Reddit, the domain mismatch will lead to failure.

Ask yourself:

- Does the domain match? If you are building a medical diagnostic tool, general healthcare data isn’t enough; you need specific data relevant to the pathology you are detecting.

- Does it reflect real-world conditions? If you are training a voice recognition system for a noisy factory floor, a dataset recorded in a soundproof studio will not perform well in deployment.

Using irrelevant data introduces significant risk. The model might achieve high accuracy during testing on that specific dataset, but it will fail when exposed to the nuances of your actual user environment. AI dataset quality starts with relevance—if the context is wrong, the quality of the labels doesn’t matter.
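For text data, one rough way to put a number on domain mismatch is the out-of-vocabulary (OOV) rate: the share of words in a sample of your real, in-domain data that never appear in the candidate dataset. The sketch below is a heuristic we are assuming for illustration, not a standard test; the function names are hypothetical and the regex tokenizer is deliberately simplistic.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercase, then keep runs of letters, digits, and apostrophes as tokens.
    return re.findall(r"[a-z0-9']+", text.lower())


def oov_rate(candidate_texts: list[str], target_texts: list[str]) -> float:
    """Fraction of target-domain tokens that never occur in the candidate dataset."""
    vocab = {tok for text in candidate_texts for tok in tokenize(text)}
    target_tokens = [tok for text in target_texts for tok in tokenize(text)]
    if not target_tokens:
        return 0.0
    unseen = sum(1 for tok in target_tokens if tok not in vocab)
    return unseen / len(target_tokens)


# Toy example: casual forum-style text vs. the legal domain we actually care about.
candidate = ["lol that movie was fire", "anyone else think the ending was mid"]
target = ["The lessee shall indemnify the lessor", "The ending clause was void"]
print(f"OOV rate: {oov_rate(candidate, target):.2f}")  # OOV rate: 0.55
```

A high OOV rate on a representative sample of your own data is a strong hint that the candidate dataset comes from a different domain, even if it is large and clean.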

Step 2: Validate Data Accuracy and Label Reliability

Once you’ve established relevance, you must verify that the information is correct. In supervised learning, the labels are the “ground truth.” If the truth is wrong, the model learns a lie.

You can assess this by performing dataset validation on a sample subset. You don’t need to check every single row, but you should manually review a random sample large enough to give a reliable estimate of the error rate.

- Spot-check annotations: Are the bounding boxes tight around the objects? Is the text transcription 100% accurate?

- Check for inter-annotator agreement: If multiple humans labeled the data, did they agree? Low agreement usually indicates that the labeling instructions were ambiguous.

Whether you use human annotators or automated labeling tools, errors will creep in. Validation acts as a quality gate, ensuring that bad labels don’t degrade your model’s performance.
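A minimal sketch of both checks, assuming your labels are plain Python lists: `audit_sample` draws a reproducible random subset for manual review, and `cohens_kappa` computes Cohen’s kappa, a standard chance-corrected agreement score for two annotators. The function names, the seed, and the sample size of 300 are illustrative assumptions. In practice you might use scikit-learn’s `cohen_kappa_score`; this version just shows what the number means.

```python
import random
from collections import Counter


def audit_sample(records: list, k: int, seed: int = 42) -> list:
    """Draw a reproducible random sample of up to k records for manual review."""
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))


def cohens_kappa(labels_a: list, labels_b: list) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected if each annotator labeled at random with their own label frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        # Both used one identical label throughout; kappa is undefined, treat as full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


sample = audit_sample(list(range(10_000)), k=300)
print(len(sample))  # 300

annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(f"kappa = {cohens_kappa(annotator_1, annotator_2):.2f}")  # kappa = 0.33
```

A kappa of 1.0 means perfect agreement and 0 means no better than chance, so a low value like the one in this toy example is a cue to revisit the labeling instructions.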

Step 3: Measure Completeness and Coverage

A high-quality dataset must be representative of the entire problem space, not just the “easy” examples. “Coverage” refers to how well the data spans the diversity of the real world.

For example, a self-driving car dataset that only contains footage from sunny days has poor coverage. It will likely fail the moment it rains. To evaluate this, look at training data quality metrics regarding class distribution.

- Class Balance: Do you have 10,000 images of cats but only 100 of dogs? This imbalance tends to bias the model toward the majority class, and the model may overfit the handful of minority examples it does see.

- Missing Values: Are there critical data points left blank?
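Both questions reduce to simple counts. The following sketch assumes labels in a list and records as dictionaries; the function names are hypothetical, and it treats a missing key, `None`, or an empty string as a blank value (adapt this if your format marks gaps differently, for example with `NaN`).

```python
from collections import Counter


def class_balance(labels: list) -> dict:
    """Share of each class plus the majority-to-minority count ratio."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("no labels to summarize")
    total = sum(counts.values())
    return {
        "shares": {cls: n / total for cls, n in counts.items()},
        "imbalance_ratio": max(counts.values()) / min(counts.values()),
    }


def missing_rates(rows: list[dict], fields: list[str]) -> dict:
    """Fraction of rows in which each field is absent, None, or an empty string."""
    if not rows:
        return {field: 0.0 for field in fields}
    return {
        field: sum(1 for row in rows if row.get(field) in (None, "")) / len(rows)
        for field in fields
    }


labels = ["cat"] * 10_000 + ["dog"] * 100
print(class_balance(labels)["imbalance_ratio"])  # 100.0

rows = [
    {"image": "a.jpg", "label": "cat"},
    {"image": "b.jpg", "label": ""},
    {"image": None, "label": "dog"},
    {"label": "dog"},
]
print(missing_rates(rows, ["image", "label"]))  # {'image': 0.5, 'label': 0.25}
```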

If your dataset is too narrow, your AI will be brittle. It might perform exceptionally well in controlled tests but fail to generalize when faced with edge cases or unexpected variables in production.

Step 4: Detect Bias and Ethical Risks

Bias in AI is often unintended, stemming from historical prejudices or sampling errors within the dataset. However, the legal and reputational damage it causes is very real.

You must actively screen for:

- Demographic Bias: Does the dataset underrepresent certain genders, ethnicities, or age groups?

- Sampling Bias: Was the data collected from a single geographic location that doesn’t represent your global user base?

Evaluating for bias involves comparing the distribution of your data against the distribution of the real-world population you intend to serve. Identifying these gaps early allows you to correct them via augmentation or re-sampling. Ignoring this step directly degrades AI dataset quality and can lead to unfair or discriminatory model behaviors.
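As a minimal sketch of that comparison, assuming each record carries one demographic group label and that you have reference shares for your target population: `representation_gaps` reports each group’s observed share against the reference, and `total_variation_distance` condenses the overall mismatch into one number (0 means the distributions match). Both function names are hypothetical, and the age bands and reference shares in the example are made-up toy values, not real population statistics.

```python
from collections import Counter


def representation_gaps(groups: list[str], reference: dict[str, float]) -> dict:
    """Observed vs. reference share for each group, plus their ratio."""
    if not groups:
        raise ValueError("no group labels to compare")
    counts, total = Counter(groups), len(groups)
    report = {}
    for group, expected in reference.items():
        observed = counts[group] / total
        # ratio < 1.0 means the group is underrepresented relative to the reference.
        ratio = observed / expected if expected > 0 else float("inf")
        report[group] = {"observed": observed, "expected": expected, "ratio": ratio}
    return report


def total_variation_distance(groups: list[str], reference: dict[str, float]) -> float:
    """Half the summed absolute share differences (0 = identical; assumes reference sums to 1)."""
    if not groups:
        raise ValueError("no group labels to compare")
    counts, total = Counter(groups), len(groups)
    keys = set(reference) | set(counts)
    return 0.5 * sum(abs(counts[k] / total - reference.get(k, 0.0)) for k in keys)


# Toy numbers for illustration only.
ages = ["18-34"] * 70 + ["35-54"] * 25 + ["55+"] * 5
reference = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}
for group, row in representation_gaps(ages, reference).items():
    print(f"{group}: ratio {row['ratio']:.2f}")
print(f"TVD: {total_variation_distance(ages, reference):.2f}")  # TVD: 0.40
```

Groups with a ratio well below 1.0 are candidates for targeted collection, augmentation, or re-sampling.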

Step 5: Evaluate Data Freshness and Timeliness

Data has a shelf life. Language evolves, consumer behaviors shift, and visual environments change. Using stale data can result in “concept drift,” where the relationship between inputs and correct outputs has changed since the data was collected, so what the model learned no longer applies to the current reality.

This is critical for specific use cases:

- Fraud Detection: Scammers constantly change their tactics. Data from five years ago won’t catch today’s fraud.

- NLP: Slang and terminology change rapidly. A sentiment analysis model trained on 2010 tweets might misunderstand 2024 internet culture.

Always ask: When was this dataset last updated? Is it a static dump from a specific year, or is it part of a pipeline that is continuously refreshed?
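If records carry timestamps, you can answer those questions from the data itself rather than relying on release notes. This is a sketch under the assumption that each record has a collection date; the function name is hypothetical and the 365-day staleness threshold is an arbitrary placeholder, since the right window depends on the use case (fraud data goes stale far faster than most). Passing `today` explicitly keeps the result reproducible.

```python
from datetime import date
from statistics import median


def freshness_summary(record_dates: list[date], today: date, max_age_days: int = 365) -> dict:
    """Summarize how old a dataset's records are relative to `today`."""
    if not record_dates:
        raise ValueError("no timestamps to summarize")
    ages = [(today - d).days for d in record_dates]
    return {
        "newest_record": max(record_dates),
        "oldest_record": min(record_dates),
        "median_age_days": median(ages),
        # Share of records older than the chosen staleness threshold.
        "share_stale": sum(age > max_age_days for age in ages) / len(ages),
    }


today = date(2024, 6, 1)
dates = [date(2019, 1, 15), date(2023, 12, 1), date(2024, 5, 20)]
summary = freshness_summary(dates, today, max_age_days=365)
print(summary["median_age_days"], round(summary["share_stale"], 2))  # 183 0.33
```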

Step 6: Review Dataset Documentation and Metadata

You should never have to guess where your data came from. High-quality datasets come with comprehensive documentation, often called a “datasheet” or “data card.”

Good documentation provides transparency into:

- Collection Methods: How was the data sourced? Was it scraped, crowdsourced, or synthetic?

- Annotation Guidelines: What instructions were given to the labelers? This helps you understand how subjective cases were handled.

- Known Limitations: Honest providers will list what the dataset doesn’t cover.

If a dataset lacks metadata or clear documentation, treat it with skepticism. Without this context, dataset validation becomes a guessing game.
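One lightweight way to enforce this is to treat documentation as structured metadata and check it before any data is loaded. The field names below are hypothetical, loosely mirroring the documentation items listed in this step plus license and update date; adapt them to whatever datasheet format your provider uses.

```python
import json

# Hypothetical minimum documentation fields; adjust to your provider's format.
REQUIRED_FIELDS = (
    "source",                 # where the data came from
    "collection_method",      # scraped, crowdsourced, synthetic, ...
    "annotation_guidelines",  # instructions given to labelers
    "known_limitations",      # what the dataset does not cover
    "license",                # terms of use
    "last_updated",           # when the data was last refreshed
)


def missing_documentation(metadata: dict) -> list[str]:
    """Required fields that are absent, null, or blank in a dataset's metadata."""
    return [f for f in REQUIRED_FIELDS if not str(metadata.get(f) or "").strip()]


# Example metadata as it might be parsed from a hypothetical datasheet.json file.
raw = '{"source": "public forum posts", "collection_method": "scraped", "license": "", "known_limitations": null}'
print(missing_documentation(json.loads(raw)))
# ['annotation_guidelines', 'known_limitations', 'license', 'last_updated']
```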

Step 7: Apply Training Data Quality Metrics

Finally, move beyond qualitative checks and apply quantitative training data quality metrics. These are objective numbers that help you compare different datasets.

Key metrics include:

- Label Accuracy Rate: The percentage of labels in your sample set that are correct.

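- Noise Level: The proportion of records that are irrelevant or corrupted.

- Duplicate Rate: The percentage of records that are exact or near duplicates. Duplicates that land in both the training and test splits can artificially inflate test accuracy without improving learning.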

By quantifying these factors, you can make an apples-to-apples comparison between an open-source dataset and a vendor-supplied one.
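A sketch of the three metrics, assuming you have recorded a pass/fail verdict for each label in your audited sample and that records can be compared as text. `label_accuracy` adds a Wilson score interval, because an accuracy estimated from a few hundred samples carries real uncertainty; `noise_level` relies on a validity check you supply; and `duplicate_rate` only catches exact duplicates after lowercasing and collapsing whitespace. Near-duplicate detection (for example, MinHash or embedding similarity) is out of scope here, and all function names are illustrative.

```python
import hashlib
import math


def label_accuracy(audit_results: list[bool], z: float = 1.96) -> tuple[float, float, float]:
    """Share of audited labels judged correct, with a Wilson score interval (z=1.96 ~ 95%)."""
    n = len(audit_results)
    if n == 0:
        raise ValueError("no audited labels")
    p = sum(audit_results) / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return p, centre - half, centre + half


def noise_level(records: list, is_valid) -> float:
    """Share of records that fail a caller-supplied validity check."""
    return sum(not is_valid(r) for r in records) / len(records) if records else 0.0


def duplicate_rate(texts: list[str]) -> float:
    """Share of records whose normalized text already appeared earlier (exact matches only)."""
    seen, dupes = set(), 0
    for text in texts:
        normalized = " ".join(text.lower().split())  # case- and whitespace-insensitive
        # Store a fixed-size digest instead of the full text to bound memory on long documents.
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key in seen:
            dupes += 1
        else:
            seen.add(key)
    return dupes / len(texts) if texts else 0.0


acc, low, high = label_accuracy([True] * 188 + [False] * 12)
print(f"label accuracy {acc:.2f} (95% CI {low:.2f}-{high:.2f})")
print(noise_level(["ok", "", "fine", "   "], lambda r: bool(r.strip())))  # 0.5
print(round(duplicate_rate(["Hello world", "hello   world", "Goodbye"]), 2))  # 0.33
```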

Common Red Flags When Evaluating AI Datasets

As you go through this evaluation process, keep an eye out for these immediate warning signs. If you see them, proceed with extreme caution:

- No Annotation Guidelines: If the provider can’t show you the rules used to label the data, the labels are likely inconsistent.

- Unknown Data Source: “Black box” data can harbor legal liabilities regarding copyright and privacy.

- Extremely Cheap “Bulk” Datasets: Quality annotation requires human effort and expertise. If the price seems too good to be true, the quality usually is.

- No Validation Process: If the provider hasn’t validated the data themselves, they are passing that labor and risk onto you.

These red flags are strong indicators of poor AI dataset quality, which is likely to cost you more in re-training and debugging than you saved on the data purchase.

Build vs Buy: Why Dataset Marketplaces Reduce Risk

After evaluating the criteria above, many teams realize that collecting and cleaning data in-house is a massive undertaking. It requires developing scraping tools, managing annotation teams, and setting up validation pipelines.

This is where trusted data partners come in. Using a curated source like the Macgence Data Marketplace allows you to skip the risky collection phase. Marketplace datasets are typically:

- Pre-validated: The quality checks and metrics are already established.

- Domain-Specific: You can find specialized data for healthcare, automotive, or finance without starting from scratch.

- Faster to Deploy: You can license the data and move straight to evaluation and training.

Whether you choose to build your own or buy from a marketplace, the key is ensuring the source is trusted and transparent.

Practical Checklist: How to Evaluate an AI Dataset Before Training

Before you hit “train,” run your dataset through this final checklist:

- Relevance: Is the dataset relevant to my specific task and domain?

- Validation: Has dataset validation been performed on a sample set?

- Accuracy: Are the labels accurate, and is the inter-annotator agreement high?

- Coverage: Does the dataset cover edge cases and maintain class balance?

- Bias Check: Have demographic and sampling biases been identified and mitigated?

- Metrics: Are training data quality metrics available and within acceptable ranges?

- Documentation: Is there clear documentation regarding source and licensing?
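The measurable items on this checklist can be turned into an automated gate that runs before training. Relevance and bias still need human judgment, so this sketch covers only the numeric checks. The threshold values and the `DatasetReport` structure are hypothetical placeholders; pick limits that fit your own project and risk tolerance.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration; set your own per project and risk level.
MIN_LABEL_ACCURACY = 0.95
MIN_KAPPA = 0.6
MAX_IMBALANCE_RATIO = 10.0
MAX_DUPLICATE_RATE = 0.01


@dataclass
class DatasetReport:
    label_accuracy: float    # from the manual audit of a random sample
    kappa: float             # inter-annotator agreement
    imbalance_ratio: float   # majority-class count / minority-class count
    duplicate_rate: float    # share of repeated records
    missing_docs: list[str]  # required documentation fields that are absent


def checklist_failures(report: DatasetReport) -> list[str]:
    """Return the measurable checklist items this dataset fails."""
    failures = []
    if report.label_accuracy < MIN_LABEL_ACCURACY:
        failures.append(f"label accuracy {report.label_accuracy:.2f} < {MIN_LABEL_ACCURACY}")
    if report.kappa < MIN_KAPPA:
        failures.append(f"kappa {report.kappa:.2f} < {MIN_KAPPA}")
    if report.imbalance_ratio > MAX_IMBALANCE_RATIO:
        failures.append(f"imbalance ratio {report.imbalance_ratio:.1f} > {MAX_IMBALANCE_RATIO}")
    if report.duplicate_rate > MAX_DUPLICATE_RATE:
        failures.append(f"duplicate rate {report.duplicate_rate:.3f} > {MAX_DUPLICATE_RATE}")
    if report.missing_docs:
        failures.append("missing documentation: " + ", ".join(report.missing_docs))
    return failures


report = DatasetReport(label_accuracy=0.97, kappa=0.55, imbalance_ratio=12.0,
                       duplicate_rate=0.001, missing_docs=["license"])
for item in checklist_failures(report):
    print("FAIL:", item)
```

An empty list means the dataset clears every numeric check; anything else is a reason to pause before you hit “train.”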

AI Dataset Quality Is a Strategic Decision

The performance of your AI is a direct reflection of the data it consumes. Skimping on evaluation doesn’t speed up development; it creates technical debt that you will have to pay off later with re-training and patches.

By prioritizing AI dataset quality—through rigorous validation, objective metrics, and relevance checks—you ensure a higher ROI for your AI initiatives. Don’t just trust the file size; verify the contents.

Ready to find data you can trust? Explore verified, high-quality datasets on the Macgence Data Marketplace today.
