AI Training Data Solutions: What’s Changing in 2025?

In the ever-changing field of artificial intelligence, a model is only as good as the data it is trained on. Algorithms may get more attention, but training data is the cornerstone of every effective AI solution. Well-labeled, diverse, high-quality datasets are the unsung heroes driving innovation, from real-time language translation to self-driving cars. This blog covers why AI training data matters, the difficulties of finding and preparing it, and the solutions that help companies and developers realize the full potential of machine learning.

What Is AI Training Data?

AI training data is the fundamental information used to teach a machine learning model to identify patterns, make choices, and improve over time; AI training data solutions are the methods and services for sourcing, preparing, and managing that data. An AI system learns from the data it is exposed to, much as a person learns from experience. Whether a model recognizes objects in an image, understands spoken language, or forecasts customer behavior, its intelligence is shaped by its training data.

Meaning and Objective

Training data is the input a machine learning algorithm receives during the learning phase. It consists of examples paired with labels or known outcomes (for supervised learning) or unlabeled raw inputs (for unsupervised learning). The model’s goal is to analyze this data, identify the underlying patterns, and use what it learns to make accurate predictions or decisions on new, unseen data.

The purpose of training data is to:

- Help the model recognize patterns or relationships in the data.

- Tune internal parameters (like weights in neural networks).

- Minimize errors in prediction by comparing outputs with known results.

- Improve the model’s performance over iterations.

Without training data, no amount of advanced algorithms or computing power can result in a functional AI system.
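To make the list above concrete, here is a minimal sketch, assuming a toy one-feature linear model trained with plain gradient descent rather than any particular framework. Labeled examples tune the internal parameters `w` and `b` by repeatedly comparing predictions with known results; the function names `train` and `mse` are illustrative only.

```python
# Minimal sketch: a one-feature linear model y ≈ w * x + b trained with
# plain gradient descent on labeled (input, known result) pairs.


def mse(examples, w, b):
    """Mean squared error between predictions and known results."""
    return sum(((w * x + b) - y) ** 2 for x, y in examples) / len(examples)


def train(examples, lr=0.05, epochs=1000):
    """Tune w and b so predictions match the labels; return params and loss history."""
    w, b = 0.0, 0.0  # internal parameters, analogous to neural-network weights
    n = len(examples)
    history = []
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in examples:
            error = (w * x + b) - y  # compare the output with the known result
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        # Step against the gradient to reduce prediction error.
        w -= lr * grad_w
        b -= lr * grad_b
        history.append(mse(examples, w, b))
    return w, b, history


# Toy labeled data generated from the rule y = 2x + 1.
data = [(x, 2 * x + 1) for x in range(5)]
w, b, history = train(data)
print(f"w={w:.3f}, b={b:.3f}, final loss={history[-1]:.6f}")
```

The loss recorded in `history` shrinks over the iterations as `w` and `b` approach the rule that generated the labels, which is the "improve over iterations" step in miniature.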

AI Training Data Types

Depending on the use case, AI training data can take many different formats. It often fits into one of the following groups:

Structured Data

Structured data is organized into rows and columns, typically stored in databases or spreadsheets. Examples include sales records, customer information, and time-series data.

Unstructured Data

Unstructured data is raw information that doesn’t follow a set format, such as text, images, audio, and video. Most real-world data is unstructured and must be preprocessed before it can be used.

Labeled Data

Labeled data carries tags or annotations that identify particular characteristics or outcomes. For instance, an image labeled “cat” teaches a model what a cat looks like. Labeled data is essential for supervised learning tasks.

Unlabeled Data

Unlabeled data has no annotations. It is often used in unsupervised learning, where the model is left to discover patterns on its own. Although unlabeled data is far more abundant, using it for supervised tasks usually requires tagging it manually or applying more sophisticated techniques.

Each type plays a distinct role in training AI systems, and both the choice of data type and the upkeep of data quality can greatly affect an AI project’s success.
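A minimal sketch of how labeled and unlabeled examples might sit side by side in one dataset, assuming a hypothetical `Example` record whose `label` stays `None` until someone annotates it. The file names are placeholders, not real data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Example:
    """One training example: raw input plus an optional label."""
    content: str                 # e.g. text, or a path to an image/audio file
    label: Optional[str] = None  # None means the example is unlabeled


def split_by_label(examples):
    """Separate labeled examples (usable for supervised learning) from unlabeled ones."""
    labeled = [e for e in examples if e.label is not None]
    unlabeled = [e for e in examples if e.label is None]
    return labeled, unlabeled


dataset = [
    Example("photo_001.jpg", "cat"),
    Example("photo_002.jpg", "dog"),
    Example("photo_003.jpg"),  # no annotation yet
    Example("photo_004.jpg"),
]
labeled, unlabeled = split_by_label(dataset)
print(len(labeled), "labeled,", len(unlabeled), "unlabeled")
```

The labeled pool can feed supervised training directly, while the unlabeled pool is what annotation workflows or unsupervised methods would draw from.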

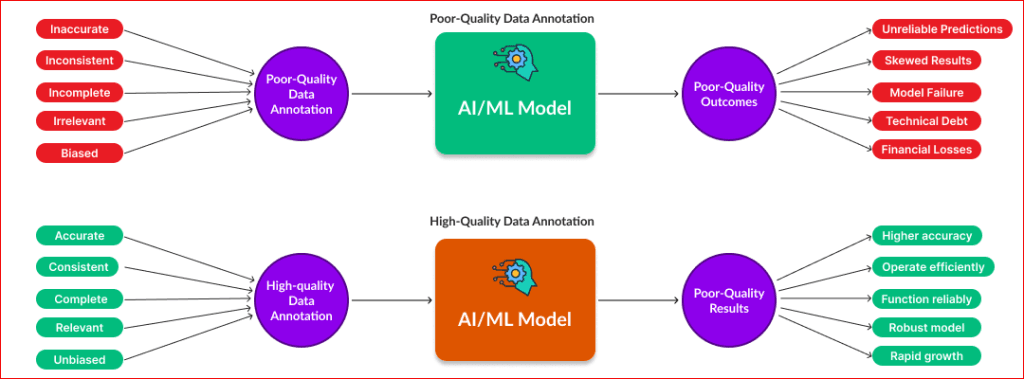

Why Quality Training Data Matters

“Garbage in, garbage out” is a well-known saying in AI, and it captures why high-quality training data matters so much. If a model is trained on faulty, biased, or poor-quality data, its outputs will be just as flawed, no matter how sophisticated the algorithm is. Training data is the foundation every AI model is built on, and like any foundation, everything above it is only as strong as what lies beneath.

“Garbage In, Garbage Out” Principle

By finding patterns in data, AI models acquire knowledge. However, the model may also pick up irrelevant, biased, inconsistent, or incomplete patterns from the input data. As a result, misclassifications, poor decision-making, and inaccurate results can occur. In other words, the caliber of the data your AI system uses ultimately determines how good it is.

Impact on Model Bias, Accuracy, and Generalization

- Bias: If the data used to train an AI model does not truly reflect everyone it will serve, results can be heavily skewed. For example, a face recognition system trained mostly on images of people with lighter skin tones tends to be less accurate for people with darker skin tones.

- Accuracy: Even small labeling errors or noisy data can significantly lower a model’s accuracy. Errors in text annotations, image labels, or audio quality can all cause problems.

- Generalization: A major goal in AI is building models that generalize well, meaning they perform correctly on data they haven’t seen before. Models trained on limited or repetitive datasets may overfit, performing well on training data but poorly in real-world scenarios.
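One practical way to surface the bias problem described above is to break evaluation accuracy down by group instead of reporting a single number. The sketch below assumes that, for each held-out example, you have a group attribute plus the predicted and true labels; the group names and counts are made up for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples.

    Returns overall accuracy and a dict of per-group accuracy, so that a
    strong average cannot hide poor performance on an underrepresented group.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, true in records:
        total[group] += 1
        correct[group] += int(predicted == true)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Hypothetical evaluation results: group "A" dominates the test set.
results = (
    [("A", "match", "match")] * 90
    + [("A", "match", "no_match")] * 2
    + [("B", "no_match", "match")] * 5
    + [("B", "match", "match")] * 3
)
overall, per_group = accuracy_by_group(results)
print(f"overall={overall:.2f}", {g: round(a, 2) for g, a in per_group.items()})
```

Here the overall accuracy looks healthy because group "A" dominates, while group "B" fares far worse. Running the same check on data the model never saw during training is also how a generalization gap shows up.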

Real-World Examples of Poor Data Leading to Failed AI Outcomes

- Microsoft’s Tay Chatbot (2016): Designed to learn from Twitter users, Tay quickly began tweeting offensive and racist content. This happened because it learned from the toxic data it was fed, demonstrating how vulnerable AI is to low-quality or manipulated inputs.

- Amazon’s AI Hiring Tool: Amazon scrapped an internal AI recruiting tool after discovering it discriminated against female candidates. The model had been trained on ten years of resumes, mostly from men, reflecting past hiring biases and inadvertently learning to penalize resumes with female-related terms.

- Healthcare AI Misdiagnoses: Some AI tools used in healthcare have underperformed for minority groups because the training data lacked sufficient representation. This raises serious concerns about fairness, trust, and patient safety.

Common Challenges in Training Data Collection

Gathering training data may seem simple, but it involves a number of difficulties. Every stage needs careful consideration, from finding the right data to ensuring it is correctly labeled and responsibly sourced. Here are some of the most common obstacles organizations encounter:

1. Lack of Data

Many AI projects lack access to large, ready-made datasets. In fields such as healthcare, robotics, and specialized manufacturing, relevant data can be extremely rare or hard to obtain. Without enough examples to learn from, models struggle to identify patterns or make accurate predictions. This shortage often slows development or forces teams to rely on generated (synthetic) data.

2. Privacy, Ethics and Regulations

Privacy is a major concern when data relates to real people. Personal images, social media posts, and medical records, for example, cannot be used without restriction. Businesses must obtain the necessary permissions, comply with strict regulations such as GDPR, and ensure that they collect and use data ethically and respectfully.
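One common technical safeguard is to pseudonymize direct identifiers before data enters a training pipeline. The sketch below uses a keyed hash (HMAC-SHA256 from Python’s standard library); the field names, the sample record, and the hard-coded key are hypothetical placeholders. Note that under GDPR, pseudonymized data generally still counts as personal data, so this reduces risk but does not by itself make a dataset compliant.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so records can still be
    joined, but without the key nobody can recompute the token for a known name.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, identifier_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with identifier fields pseudonymized."""
    return {
        field: pseudonymize(str(val), secret_key) if field in identifier_fields else val
        for field, val in record.items()
    }


# Placeholder key for illustration only; in practice, load it from a secrets manager.
KEY = b"replace-with-a-securely-stored-key"
patient = {"name": "Jane Doe", "email": "jane@example.com", "age": 42, "diagnosis": "J45"}
print(scrub_record(patient, {"name", "email"}, KEY))
```

A keyed hash is used instead of a plain hash because common values such as names or emails could otherwise be recovered by hashing guesses and comparing.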

3. Inconsistent Labels

AI needs clear and accurate labels to learn effectively. However, labeling is done by people, and people make mistakes and disagree. One annotator may label a picture “dog,” while another tags the same picture “puppy.” Inconsistencies like this can confuse the model. Consistent, correct labeling is essential, but it isn’t always simple.
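A minimal sketch of two common countermeasures, assuming two annotators labeled the same six images: normalize synonyms with a mapping taken from the labeling guidelines (the `CANONICAL` map here is hypothetical), and measure agreement with Cohen’s kappa, which corrects raw agreement for the agreement expected by chance.

```python
from collections import Counter

# Hypothetical labeling guideline: map synonyms to one canonical class.
CANONICAL = {"puppy": "dog", "dog": "dog", "kitten": "cat", "cat": "cat"}


def normalize(labels):
    """Apply the guideline mapping; unknown labels are left unchanged."""
    return [CANONICAL.get(label.strip().lower(), label) for label in labels]


def cohens_kappa(a, b):
    """Agreement between two annotators, corrected for chance agreement."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:  # both annotators used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["dog", "cat", "dog", "cat", "dog", "cat"]
annotator_2 = ["puppy", "cat", "dog", "kitten", "dog", "dog"]

raw = cohens_kappa(annotator_1, annotator_2)
fixed = cohens_kappa(normalize(annotator_1), normalize(annotator_2))
print(f"kappa before normalization: {raw:.2f}, after: {fixed:.2f}")
```

Normalization removes disagreements that are only naming differences; the disagreement that remains (the last image) is a genuine one worth sending back for review.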

4. Odd Situations That Don’t Follow the Trend (Edge Cases)

AI often fails in unexpected situations it has never encountered before, such as a self-driving car meeting a camel on a city street. These rare events, known as edge cases, are hard to predict but essential to handle. If they are not represented in the training data, the model won’t know how to react.

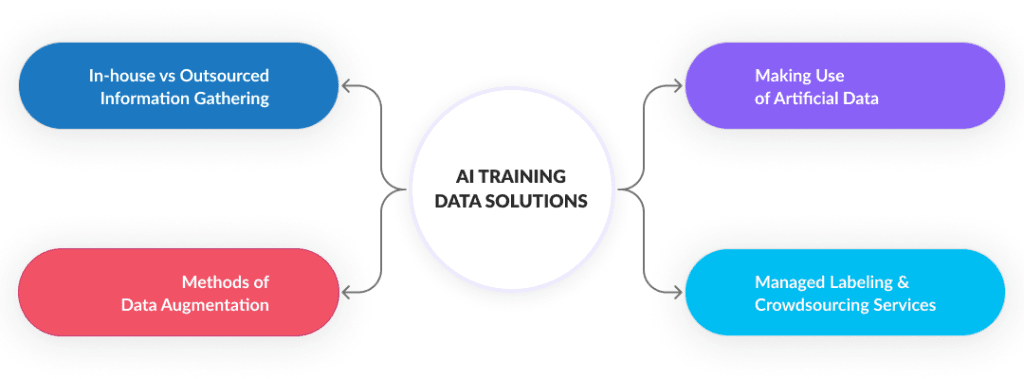

AI Training Data Solutions: An Overview

Now that we’ve seen how difficult gathering training data can be, let’s look at some practical solutions. Whether you’re building an AI model from scratch or refining an existing one, there are several ways to tackle the data problem. Here are some of the most widely used methods, ranging from in-house efforts to crowdsourcing:

1. In-house vs Outsourced Data Collection

Some businesses prefer to collect and label data internally, particularly when handling sensitive data or special business requirements. This gives them full control over privacy and quality.

Conversely, outsourcing data collection to professional suppliers can save money, time, and effort. These providers often have the resources and expertise to scale faster and manage challenging labeling jobs. The decision depends mostly on your project’s size, budget, and control requirements.

2. Methods of Data Augmentation

Why gather more data when you can get more out of what you already have? Data augmentation expands a dataset by creating slightly altered versions of existing examples, for instance by flipping or rotating images, changing the lighting, or adding noise. This improves your model’s ability to learn and generalize without requiring a large amount of new data.
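The transformations named above can be sketched on a tiny grayscale image stored as nested lists of pixel values (0 to 255). This is a dependency-free illustration under that assumption; real pipelines typically use an image library, and the function names here are hypothetical.

```python
import random


def hflip(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def rotate90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_brightness(image, delta):
    """Shift every pixel value, clamped to the 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]


def add_noise(image, scale, rng):
    """Add uniform random noise to each pixel, clamped to 0-255."""
    return [[min(255, max(0, p + rng.randint(-scale, scale))) for p in row] for row in image]


def augment(image, rng):
    """Produce several altered copies of one image; each keeps the original label."""
    return [
        hflip(image),
        rotate90(image),
        adjust_brightness(image, 40),
        add_noise(image, 10, rng),
    ]


rng = random.Random(0)  # fixed seed so the augmented set is reproducible
original = [[0, 50, 100],
            [150, 200, 250]]
augmented = augment(original, rng)
print(len(augmented), "new examples from 1 original")
```

Choose transformations that preserve the label for your task: flipping a photo of a cat still shows a cat, but flipping an image of a digit or a road sign with text can change its meaning.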

3. Using Synthetic Data

Real-world data is sometimes too difficult to obtain or too private to use. Synthetic data can help: it is generated by computer programs to simulate real situations. Companies building self-driving cars, for instance, create traffic scenarios using 3D simulations. Synthetic data is often safer to share, easier to scale, and cheaper than collecting real data, especially for rare or dangerous circumstances.
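A real driving simulator renders full 3D scenes; the sketch below only illustrates the parameter-sampling idea behind such tools. The scenario fields, categories, and weights are hypothetical, chosen so that a rare condition (an animal on the road) is deliberately sampled far more often than it would appear in recorded driving.

```python
import random
from dataclasses import dataclass


@dataclass
class Scenario:
    weather: str
    time_of_day: str
    obstacle: str
    pedestrian_count: int


WEATHER = ["clear", "rain", "fog", "snow"]
TIMES = ["day", "dusk", "night"]
# Rare obstacles get a large weight on purpose, so the synthetic set covers
# situations that real-world recordings almost never contain.
OBSTACLES = ["none", "parked_car", "debris", "animal"]
OBSTACLE_WEIGHTS = [0.4, 0.2, 0.2, 0.2]


def generate_scenarios(n, seed=0):
    """Sample n scenario descriptions that a simulator could then render."""
    rng = random.Random(seed)  # seeded so the dataset can be regenerated exactly
    return [
        Scenario(
            weather=rng.choice(WEATHER),
            time_of_day=rng.choice(TIMES),
            obstacle=rng.choices(OBSTACLES, weights=OBSTACLE_WEIGHTS, k=1)[0],
            pedestrian_count=rng.randint(0, 10),
        )
        for _ in range(n)
    ]


scenarios = generate_scenarios(1000)
animal_share = sum(s.obstacle == "animal" for s in scenarios) / len(scenarios)
print(f"{animal_share:.1%} of synthetic scenarios include an animal")
```

Because every record comes from a generator rather than a person, no privacy-sensitive data is involved, and the mix of conditions can be tuned to target the edge cases a model struggles with.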

4. Managed Labeling and Crowdsourcing Services

Need a lot of data labeled fast? Crowdsourcing platforms let you annotate data at scale by connecting with a worldwide network of workers. This is quick and economical, but quality can suffer without proper controls.

For enhanced accuracy and quality control, businesses leverage managed labeling services—specialized teams that operate under strict quality assurance protocols. This solution is particularly suited for high-complexity use cases such as medical image annotation and linguistically nuanced data labeling.
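A common quality control for crowdsourced labels is to collect several votes per item, keep the majority label only when workers agree strongly enough, and escalate the rest to expert reviewers. The sketch below assumes a hypothetical agreement threshold of 0.7; real services tune this per task.

```python
from collections import Counter


def aggregate_labels(votes, min_agreement=0.7):
    """Combine several crowd workers' labels for one item.

    Returns (label, agreement). If no label reaches min_agreement, the label
    is None so the item can be escalated to an expert reviewer.
    """
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return (label if agreement >= min_agreement else None), agreement


crowd_votes = {
    "img_1": ["cat", "cat", "cat", "cat", "dog"],
    "img_2": ["dog", "cat", "dog", "cat", "bird"],
}
for item, votes in crowd_votes.items():
    label, agreement = aggregate_labels(votes)
    status = label if label is not None else "needs expert review"
    print(item, status, f"({agreement:.0%} agreement)")
```

This combines the two approaches above: the crowd handles the easy majority of items cheaply, and managed or expert reviewers spend their time only on the contested ones.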

Emerging Technologies and Trends in AI Training Data Solutions

- AI Creating Its Own Training Data

- Self‑supervised learning: Models learn by predicting parts of their own input—like guessing a missing word in a sentence—so they need far fewer human‑labeled examples.

- Generative models (GANs, diffusion models): These systems can synthesize realistic images, text, audio, and more, providing extra data when real samples are scarce or sensitive.

- Rise of the Data‑Centric AI Movement

- Attention is shifting from endlessly tweaking algorithms to carefully improving the data itself.

- Cleaner, more diverse, and well‑documented datasets are proving to boost performance more reliably than marginal model changes.

- This approach tends to produce AI that is more robust, with fewer hidden biases.

- Automated Data Labeling Tools and Platforms

- AI‑assisted platforms now pre‑label easy cases, letting humans focus on the tricky ones—speeding up projects and cutting errors.

- Many annotation platforms incorporate active learning, a technique where the model identifies high-uncertainty samples for human review, thereby maximizing the impact of each labeled instance.

- End‑to‑end solutions handle quality checks, version control, and workflow management, freeing teams to concentrate on model development.
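Two of the mechanisms above can be sketched in a few lines. Both functions are illustrative assumptions, not the API of any particular platform: `masked_word_pairs` builds self-supervised training pairs by hiding one word at a time, and `select_for_review` implements uncertainty sampling, a common form of active learning, by ranking items by the entropy of the model’s predicted class probabilities.

```python
import math


def masked_word_pairs(sentence, mask_token="[MASK]"):
    """Self-supervision: turn an unlabeled sentence into (input, target) pairs.

    Each pair hides one word, and the hidden word itself is the label,
    so no human annotation is needed.
    """
    words = sentence.split()
    pairs = []
    for i in range(len(words)):
        masked = words[:i] + [mask_token] + words[i + 1:]
        pairs.append((" ".join(masked), words[i]))
    return pairs


def entropy(probs):
    """Prediction uncertainty: higher when probability is spread across classes."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_review(predictions, budget):
    """Active learning (uncertainty sampling): pick the items the model is least sure about."""
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]


sentence = "the model learns from data"
for masked, target in masked_word_pairs(sentence)[:2]:
    print(masked, "->", target)

# Hypothetical class probabilities from a partially trained model.
model_outputs = {
    "doc_1": [0.98, 0.01, 0.01],
    "doc_2": [0.40, 0.35, 0.25],
    "doc_3": [0.70, 0.20, 0.10],
}
print(select_for_review(model_outputs, budget=1))
```

With a review budget of one item, the sketch sends `doc_2`, the prediction with the most evenly spread probabilities, to a human, while the confident `doc_1` is left to the model.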

Best Practices for Managing Training Data

- Ensure Diversity and Representativeness

- Provide a variety of examples that represent the diversity seen in the actual world, such as various places, languages, circumstances, and populations.

- A balanced dataset enhances performance across various user groups and edge cases while lowering model bias.

- Implement Data Quality Checks

- Regularly audit datasets for errors, inconsistencies, and outdated information.

- Use validation tools to check label accuracy, completeness, and relevance.

- Set up feedback loops to improve data quality over time.

- Maintain Version Control and Documentation

- Track changes in datasets just like you would with code—keep records of versions, sources, and changes made.

- Document labeling guidelines, annotation tools used, and any assumptions or edge case decisions.

- Good documentation makes debugging and collaboration easier and more transparent.

- Ensure Compliance with Data Regulations

- Obtain proper consent, protect sensitive data, and store data securely.

- Stay informed about changing regulations to minimize compliance risks.
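The quality-check and version-control practices above can be partly automated. The sketch below assumes records are plain dictionaries with hypothetical `id`, `content`, and `label` fields and a hypothetical allowed label set. `audit` flags missing fields, unknown labels, duplicates, and underrepresented classes, and `fingerprint` derives a short content hash that could be recorded as a dataset version ID.

```python
import hashlib
import json
from collections import Counter

ALLOWED_LABELS = {"cat", "dog"}  # hypothetical label set from the labeling guidelines
REQUIRED_FIELDS = ("id", "content", "label")


def audit(records, min_share=0.2):
    """Return a list of human-readable issues found in the dataset."""
    issues = []
    seen_content = set()
    for r in records:
        rid = r.get("id")
        missing = [f for f in REQUIRED_FIELDS if not r.get(f)]
        if missing:
            issues.append(f"{rid}: missing {missing}")
        label = r.get("label")
        if label and label not in ALLOWED_LABELS:
            issues.append(f"{rid}: unknown label {label!r}")
        content = r.get("content")
        if content:
            if content in seen_content:
                issues.append(f"{rid}: duplicate content")
            seen_content.add(content)
    # Flag classes that are too rare, a simple proxy for representativeness.
    counts = Counter(r["label"] for r in records if r.get("label") in ALLOWED_LABELS)
    total = sum(counts.values())
    for label in sorted(ALLOWED_LABELS):
        share = counts[label] / total if total else 0.0
        if share < min_share:
            issues.append(f"label {label!r} is only {share:.0%} of valid labels")
    return issues


def fingerprint(records):
    """Content hash of the dataset: any edit to any record yields a new version ID."""
    ordered = sorted(records, key=lambda r: str(r.get("id")))
    canonical = json.dumps(ordered, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


records = [
    {"id": "1", "content": "img_a.jpg", "label": "cat"},
    {"id": "2", "content": "img_b.jpg", "label": "Dog"},
    {"id": "3", "content": "img_a.jpg", "label": "cat"},
    {"id": "4", "content": "img_c.jpg", "label": ""},
]
for issue in audit(records):
    print(issue)
print("dataset version:", fingerprint(records))
```

Storing the fingerprint alongside each trained model makes it possible to tell later exactly which version of the data produced it, much as a commit hash identifies a version of code.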

Conclusion

Data is more than fuel for artificial intelligence; it is the foundation of strong, trustworthy, and ethical models. As AI continues to transform daily life and reshape businesses, the importance of high-quality training data is hard to overstate. To succeed, organizations must adopt deliberate, data-centric strategies, from addressing bias and data shortages to using synthetic datasets and automated labeling tools. Because smarter AI starts with smarter data, our commitment to clean, diverse, and responsibly sourced data must advance along with the technology. Investing in the right training data today makes intelligent results possible tomorrow.

FAQs

Ans: – AI training data solutions supply the data used to teach machine learning models to recognize patterns, make decisions, and improve their performance.

Ans: – High-quality data helps produce accurate, unbiased, and broadly applicable AI outputs, while bad data produces faulty results.

Ans: – Common difficulties include a lack of data, privacy concerns, inconsistent labeling, and handling edge cases.

Ans: – Data augmentation is the creation of altered copies of existing data to improve model learning without gathering new data.

Ans: – Synthetic data is often used to replicate real-world situations when obtaining genuine data is difficult or raises privacy concerns.
