- What Exactly Is a Multimodal Conversations Datasets?

- Why Multimodal Conversations Datasets Are Essential for Modern AI

- The Challenge: Why Multimodal Conversations Datasets Are Scarce

- How Macgence Solves the Multimodal Conversations Dataset Challenge

- Real-World Results: Multimodal Conversations Datasets in Action

- Why Partner with Macgence for Your Multimodal Conversations Dataset Needs

- Getting Started: Your Path to Better Multimodal Conversational AI

- Conclusion: Multimodal Conversations Datasets Are Your Competitive Advantage

Why Your AI Can’t Understand Humans: The Multimodal Conversations Datasets Gap

Your conversational AI is failing, and you probably don’t know why. It responds to words perfectly. The grammar checks out. The speed is impressive. But somehow, it keeps missing what users actually mean. The frustrated customers. The sarcastic feedback. The urgent requests are buried in casual language.

Here’s what’s really happening: your AI is reading words but missing the conversation.

Think about the last meaningful conversation you had. You didn’t just process words, right? You noticed the slight hesitation before someone answered. The way their voice softened when discussing something personal. The micro-expressions that told you more than their words ever could.

That’s human communication in its natural form—layered, nuanced, multimodal.

And that’s exactly what multimodal conversations datasets capture. These aren’t your typical text transcripts. They’re comprehensive recordings of how humans actually communicate, combining text, audio, video, gestures, and emotional cues into training data that teaches AI to understand conversations the way humans do.

Without multimodal conversation datasets, you’re essentially teaching your AI to navigate human interaction while blindfolded. And in today’s AI landscape, that’s a competitive disadvantage you can’t afford.

At Macgence, we’ve spent over five years helping AI companies build conversational systems that actually understand humans. Through our work with 200+ organizations, we’ve seen firsthand how the right multimodal conversations dataset transforms struggling AI into exceptional systems.

Let’s explore what makes these datasets so critical—and how we help companies like yours access them.

What Exactly Is a Multimodal Conversations Datasets?

A multimodal conversations dataset is a structured collection of real human dialogues captured across multiple communication channels simultaneously. Instead of just recording what people say, these datasets capture how they say it, what they look like while saying it, and the context surrounding the entire interaction.

Imagine a customer calling tech support. A traditional dataset captures the transcript. But a multimodal conversation dataset captures:

- The exact words spoken (text transcription)

- How they were spoken (audio with tone, pitch, emotion, pacing)

- Visual communication (facial expressions, gestures, body language if video)

- Temporal dynamics (pauses, interruptions, turn-taking patterns)

- Contextual metadata (background noise, speaker demographics, conversation purpose)

- Emotional annotations (frustration, satisfaction, confusion at each turn)

This comprehensive capture creates training data that reflects the full complexity of human conversation. And that complexity is exactly what modern AI systems need to perform well in real-world applications.

Research consistently demonstrates the value here. Studies show that AI models trained on multimodal conversation datasets achieve 35-45% better accuracy in understanding user intent compared to text-only trained models. For emotion recognition tasks, the improvement jumps to nearly 60%.

The Anatomy of Quality Multimodal Conversations Dataset

Not all multimodal conversation datasets are created equal, though. High-quality datasets share several critical characteristics:

- Synchronized Multi-Channel Recording

All modalities must be perfectly time-aligned. The audio timestamp needs to match the video frame, which needs to match the transcript word. Even a 100-millisecond misalignment can corrupt the learning process, teaching AI to associate the wrong facial expression with the wrong words.

- Rich Annotation Layers

Raw recordings aren’t enough. Quality datasets include expert annotations marking:

- Speaker emotions at the utterance level

- Conversational intent for each turn

- Discourse relationships between statements

- Turn-taking dynamics and interruption patterns

- Non-verbal cues and their meanings

- Diverse Representativeness

Effective datasets capture conversations across demographics, accents, dialects, and communication styles. An AI trained only on young American English speakers will struggle with elderly British users or non-native speakers.

- Domain Relevance

Generic conversations teach generic patterns. If you’re building healthcare AI, you need medical consultation conversations. For customer service AI, you need actual support interactions. Domain-specific multimodal conversation datasets dramatically reduce training time and improve accuracy.

- Ethical Collection and Privacy Compliance

All participants must provide informed consent. Personal information must be protected. GDPR, HIPAA, and other regulations must be followed rigorously. At Macgence, we ensure every dataset meets stringent privacy standards before it reaches your team.

Why Multimodal Conversations Datasets Are Essential for Modern AI

The conversational AI landscape has fundamentally changed. Users expect natural, context-aware interactions. They expect AI to understand not just their requests, but the urgency, emotion, and nuance behind them.

Meeting these expectations requires multimodal conversation datasets. Here’s why:

Understanding Beyond Words

Language is inherently ambiguous. The phrase “that’s just great” could express genuine satisfaction or biting sarcasm. Text alone can’t distinguish between them, but the tone of voice makes it immediately clear.

Multimodal conversation datasets teach AI to use all available signals—just like humans do. The frustrated sigh before answering. The brightening face when a solution works. The hesitant pause that signals confusion.

These non-verbal cues carry as much meaning as the words themselves. Research indicates that 93% of communication effectiveness comes from non-verbal elements. AI trained only on text is ignoring 93% of the information.

Capturing Conversational Dynamics

Real conversations aren’t neat turn-by-turn exchanges. People interrupt. They speak simultaneously. They reference previous statements made minutes ago. It use pronouns that only make sense in context.

Multimodal conversation datasets preserve these dynamics. They show AI how conversations actually flow, including:

- How interruptions work and what they signal

- When silence is comfortable versus awkward

- How topic shifts happen naturally

- When and how people repair misunderstandings

These patterns are invisible in text transcripts but critical for natural dialogue systems.

Emotion Recognition and Response

Customer service AI needs to recognize frustration before it escalates. Healthcare chatbots need to detect anxiety or confusion. Educational AI needs to identify when students are struggling.

Emotion recognition requires multimodal data. Facial expressions, vocal prosody, speaking rate, and word choice all contribute to emotional state. A multimodal conversations dataset provides labeled examples of these emotional patterns, teaching AI to recognize and respond appropriately.

Our clients report 40-55% improvements in customer satisfaction scores after training on emotion-rich multimodal conversations datasets. Users feel heard and understood, not just processed.

Building Culturally Intelligent AI

Communication styles vary dramatically across cultures. Direct eye contact is respectful in some cultures, aggressive in others. Silence can signal agreement, disagreement, or deep thought depending on the cultural context.

Multimodal conversations datasets that include diverse cultural backgrounds teach AI these subtleties. This cultural intelligence is essential for global products and increasingly important for diverse domestic markets.

Handling Real-World Complexity

Laboratory conversations are clean. Real-world conversations are messy. Background noise. Multiple speakers. Accented speech. Technical jargon mixed with casual language. Phone audio quality. Video compression artifacts. These real-world conditions need to be present in training data, or your AI will fail when deployed.

Quality multimodal conversations datasets include this messy reality, preparing AI for actual operating conditions rather than idealized scenarios.

The Challenge: Why Multimodal Conversations Datasets Are Scarce

If multimodal conversations datasets are so valuable, why doesn’t everyone have them? Because creating quality datasets is genuinely difficult.

Privacy and Consent Complications

Recording multimodal conversations means capturing faces, voices, and potentially identifiable information. Getting properly informed consent from all participants is complex. Ensuring GDPR, HIPAA, and CCPA compliance adds layers of legal complexity.

Many organizations simply can’t navigate these requirements effectively, leaving them without access to the data they need.

Collection Costs Are Substantial

Quality multimodal recording requires:

- Professional audio and video equipment

- Controlled recording environments

- Participant recruitment and compensation

- Multi-angle video capture for gestures

- High-quality audio to capture vocal nuances

Collecting just 100 hours of multimodal conversations can cost $50,000-$150,000 depending on quality requirements and participant diversity.

Annotation Is Expensive and Time-Intensive

Raw recordings need expert annotation across multiple dimensions. A single hour of conversation might require:

- 8-10 hours for transcript creation and speaker diarization

- 6-8 hours for emotion annotation

- 4-6 hours for intent labeling

- 3-5 hours for discourse relationship marking

- 2-4 hours for quality assurance

That’s 25-35 hours of skilled labor per hour of conversation. For a modest 1,000-hour dataset, you’re looking at 25,000-35,000 annotation hours.

Quality Control Is Complex

Ensuring annotation consistency across annotators and over time requires sophisticated quality assurance processes. Disagreements need resolution protocols. Edge cases need clear guidelines.

Without robust quality control, annotation quality degrades, and with it, model performance.

Domain Expertise Requirements

Annotating medical conversations requires medical knowledge. Legal dialogues need legal expertise. Technical support requires technical understanding. Finding annotators with both domain expertise and annotation skills is challenging and expensive.

Data Scarcity for Specific Use Cases

Even when datasets exist publicly, they often don’t match specific needs. Need customer service conversations in German with elderly speakers? Medical consultations in Arabic? Technical support for IoT devices?

Chances are, no public dataset exists. You’ll need a custom collection, which brings us back to all the challenges above.

This is precisely why we built multimodal data collection and annotation services. We’ve solved these challenges systematically, creating infrastructure and processes that make high-quality multimodal conversations datasets accessible to organizations of all sizes.

How Macgence Solves the Multimodal Conversations Dataset Challenge

We understand the multimodal data challenge intimately because we’ve worked with 200+ AI teams facing exactly these issues. Over five years, we’ve built comprehensive solutions that make quality multimodal conversations datasets accessible and affordable.

Here’s how we help:

Global Multimodal Data Collection

We collect authentic multimodal conversations across 180+ languages and dialects worldwide. Our collection network spans diverse demographics, ensuring your training data represents your actual user base.

Our collection process includes:

- Professional audio-visual recording in controlled or naturalistic environments

- Informed consent and privacy compliance for all participants

- Demographic diversity across age, gender, ethnicity, and background

- Domain-specific scenario design matching your use case

- Quality checks during collection to ensure usable data

Whether you need 100 hours or 10,000 hours, we scale collection to meet your requirements without compromising quality.

Expert Multi-Layer Annotation

Our team of certified annotators provides comprehensive labeling across all modalities:

Text-level annotation:

- Precise transcription with speaker diarization

- Intent classification for each utterance

- Entity recognition and relationship extraction

- Discourse structure and coherence marking

Audio-level annotation:

- Emotion labeling from vocal prosody

- Speaking rate and rhythm analysis

- Vocal quality and tone characterization

- Background noise and acoustic environment tagging

Video-level annotation:

- Facial expression coding (FACS-based)

- Gesture recognition and classification

- Gaze direction and attention tracking

- Body language and posture analysis

Temporal synchronization:

- Cross-modal timestamp alignment

- Turn-taking boundary identification

- Overlap and interruption marking

- Pause and silence duration measurement

We maintain ~95.5% annotation accuracy through multi-stage quality assurance, with every dataset passing through initial annotation, peer review, expert validation, and final quality audit.

Domain-Specific Dataset Creation

Generic datasets rarely meet specific needs effectively. We create custom multimodal conversations datasets tailored to your exact use case.

Recent examples:

- 500 hours of multilingual customer service calls for a European telecom

- 200 hours of patient-doctor consultations for a healthcare AI startup

- 1,000 hours of technical support conversations for a SaaS company

- 300 hours of educational tutoring sessions for an edtech platform

We work with your team to understand your AI’s operating environment, user demographics, and performance requirements. Then we design collection protocols that create data matching those specifications precisely.

Rapid Quality Assurance

Every dataset goes through rigorous validation before delivery:

- Annotation consistency checks across annotators

- Statistical representativeness analysis ensuring balanced coverage

- Edge case identification to verify rare but important scenarios

- Bias detection and mitigation for fair AI performance

- Privacy audits confirming compliance with all regulations

- Technical validation of file formats, synchronization, and metadata

We don’t deliver datasets—we deliver AI-ready, quality-assured training data that works.

Flexible Engagement Models

AI development doesn’t follow predictable timelines. Your data needs will evolve. We offer flexible engagement options:

- Project-based delivery for defined scope requirements

- Ongoing collection partnerships for continuous data needs

- Rapid deployment through our API-integrated platform

- Custom SLAs matching your development schedule

- Scalable capacity from pilot to production volumes

Compliance and Security

We’re ISO-27001, GDPR, and HIPAA compliant. Your data security is fundamental, not optional.

Our security measures include:

- Encrypted data transmission and storage

- Access controls and audit logging

- Secure annotation platforms

- Regular security assessments

- Data residency options for regulatory requirements

We handle sensitive conversational data with the same rigor you would, ensuring privacy and compliance are never compromised.

Real-World Results: Multimodal Conversations Datasets in Action

The impact of quality multimodal conversations datasets shows up in measurable business outcomes. Here’s what our clients experience:

- Healthcare AI Startup. After training on our annotated medical consultation dataset (400 hours, English and Spanish), their diagnostic chatbot’s accuracy improved from 67% to 91%. Patient satisfaction scores increased by 43%. Time-to-diagnosis decreased by 31%.

- Customer Service Platform Using our emotion-rich support conversation dataset across 8 languages, their AI achieved 38% better first-contact resolution. Customer frustration incidents dropped by 52%. Agent escalations decreased by 29%.

- Automotive Voice Assistant Training on our in-vehicle multimodal conversations (noisy environments, multiple speakers, diverse accents), their system’s command recognition accuracy improved from 78% to 94% in real-world conditions. User engagement increased by 67%.

- Educational Technology Company With our tutoring conversation dataset (multi-party, emotion-focused), their AI tutor’s ability to detect student confusion improved by 61%. Learning outcomes increased by 24%. Student engagement rose by 38%.

These aren’t isolated successes—they’re the predictable result of training AI on high-quality multimodal conversations datasets that actually represent real-world usage conditions.

Why Partner with Macgence for Your Multimodal Conversations Dataset Needs

Choosing a data partner is a critical decision that impacts your entire AI development timeline. Here’s what sets Macgence apart:

Proven Track Record

Five years serving 200+ AI companies across healthcare, automotive, finance, retail, and technology sectors. We’ve delivered millions of hours of annotated multimodal data, supporting everything from early-stage startups to Fortune 500 AI initiatives.

Uncompromising Quality

Our 95.5% annotation accuracy isn’t marketing—it’s validated through independent audits and client verification. Multiple quality assurance layers ensure every dataset meets rigorous standards before delivery.

True Multimodal Expertise

Many providers offer text annotation or image labeling. Few can handle the complexity of synchronized multimodal conversations with expert-level annotation across all channels.

Global Scale with Local Expertise

180+ languages. Diverse demographics. Cultural competence. We collect and annotate data worldwide while maintaining consistent quality and compliance standards.

Flexible and Responsive

Your requirements will evolve. We adapt with you, offering flexible engagement models, custom annotation schemas, and responsive support throughout your AI development journey.

Security You Can Trust

ISO-27001, GDPR, HIPAA compliance backed by regular audits and certifications. Your data is protected with enterprise-grade security at every stage.

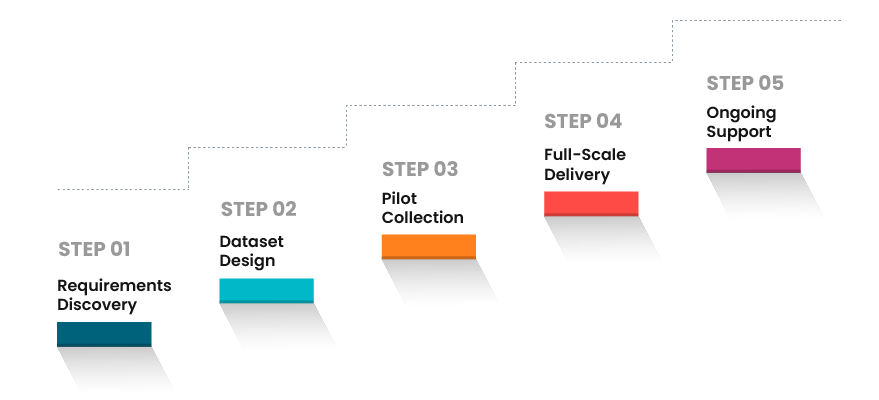

Getting Started: Your Path to Better Multimodal Conversational AI

Transforming your conversational AI starts with better training data. Here’s how we typically engage with new clients:

Step 1: Requirements Discovery We start by understanding your AI’s purpose, target users, operating environment, and performance goals. This shapes everything that follows.

Step 2: Dataset Design Based on your requirements, we design a multimodal conversations dataset specification—including volume, languages, demographics, scenarios, and annotation schemas.

Step 3: Pilot Collection We collect and annotate a small pilot dataset (typically 10-50 hours) for you to evaluate and train initial models. This validates our approach and allows refinement.

Step 4: Full-Scale Delivery Once the pilot is validated, we execute full collection and annotation. Our project management team keeps you informed throughout, with regular quality updates and milestone deliveries.

Step 5: Ongoing Support We don’t disappear after delivery. Our team provides ongoing support, helping you understand dataset characteristics, optimize usage, and expand as your needs evolve.

Conclusion: Multimodal Conversations Datasets Are Your Competitive Advantage

The conversational AI market is increasingly competitive. User expectations are rising. The difference between AI that frustrates users and AI that delights them often comes down to training data quality.

Multimodal conversations datasets provide that quality. They teach AI to understand humans the way humans actually communicate—across multiple channels, with emotion and nuance, in messy real-world conditions.

Companies investing in quality multimodal conversations datasets are building AI that works better, satisfies users more completely, and delivers measurable business value.

At Macgence, we’ve made it our mission to democratize access to world-class multimodal conversational data. Whether you’re a startup with your first AI product or an enterprise scaling global conversational systems, we have the expertise, infrastructure, and commitment to support your success.

Ready to transform your conversational AI with professional-grade multimodal conversations datasets?

Let’s discuss your specific requirements. Our team will design a data solution that accelerates your development, ensures quality, and positions your AI for real-world success.

Contact Macgence today and discover how the right multimodal conversations dataset can transform your AI from adequate to exceptional.

You Might Like

October 10, 2025

Why Your Self-Driving Car Needs Perfect Vision: The LiDAR Annotation Story

Imagine you’re driving down a busy street. Your eyes are constantly scanning – pedestrians crossing, cars merging, cyclists weaving through traffic. Now imagine teaching a machine to do the same thing, except it doesn’t have eyes. It has lasers. And those lasers need to understand what they’re “seeing.” We’ve seen many product launches that aim […]

October 9, 2025

What is Synthetic Datasets? Is it real data or fake?

Picture this: You’re building the next breakthrough AI product. Your models need millions of data points to learn. But there’s a problem. You can’t access enough real-world data due to various factors, such as compliance issues, security factors, and specific needs. Privacy regulations block you. Collection costs are sky-high. And even when you get data, […]

October 6, 2025

What Are the Most User-Friendly Geospatial AI Solutions?

Finding the correct geospatial AI platform should not feel like navigating a maze. Yet, for product managers and CTOs, choosing solutions that strike a balance between power and usability often becomes the biggest challenge in deploying location-based intelligence. The geospatial AI market is estimated to reach $2.3 billion by 2027, but here’s the one thing: […]